I recently started a new side project: an Ionic (Angular) application for workout management. I created the new Ionic project and quickly started working on the Redux implementation. It suddenly dawned on me how similar a Redux system is to the Event Sourced model I was going to build on the backend.
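The similarity is easiest to see in code. Rebuilding current state from an event log is a left fold over the events, and a Redux reducer is exactly the function you fold with. This is a minimal illustration under stated assumptions, not the POC’s code: the event names and `SalesOrder` fields are hypothetical.

```typescript
// Minimal sketch: an event-sourced projection is a fold of the event log
// through a reducer, which is what a Redux store does on every dispatch.
// Event names and SalesOrder fields are hypothetical.

type SalesOrderEvent =
  | { type: "salesOrder/created"; id: string; customer: string }
  | { type: "salesOrder/lineAdded"; sku: string; quantity: number }
  | { type: "salesOrder/cancelled" };

interface SalesOrder {
  id: string;
  customer: string;
  lines: { sku: string; quantity: number }[];
  status: "open" | "cancelled";
}

// Pure reducer: returns a new state object and never mutates its input.
// null means "no order exists yet".
function salesOrderReducer(
  state: SalesOrder | null,
  event: SalesOrderEvent
): SalesOrder | null {
  switch (event.type) {
    case "salesOrder/created":
      return { id: event.id, customer: event.customer, lines: [], status: "open" };
    case "salesOrder/lineAdded":
      if (state === null) return state; // ignore events for an unknown order
      return {
        ...state,
        lines: [...state.lines, { sku: event.sku, quantity: event.quantity }],
      };
    case "salesOrder/cancelled":
      if (state === null) return state;
      return { ...state, status: "cancelled" };
    default:
      return state; // unknown actions leave state untouched, as in Redux
  }
}

// Rebuilding the current record from the event store is a reduce over the log.
function replay(events: SalesOrderEvent[]): SalesOrder | null {
  return events.reduce<SalesOrder | null>(salesOrderReducer, null);
}

const order = replay([
  { type: "salesOrder/created", id: "so-1", customer: "ACME" },
  { type: "salesOrder/lineAdded", sku: "KB-20", quantity: 2 },
]);
console.log(order?.status, order?.lines.length); // open 1
```

With the `redux` package installed, the same reducer could be passed to `createStore`, and dispatching the logged events one at a time would arrive at the same state that `replay` computes.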

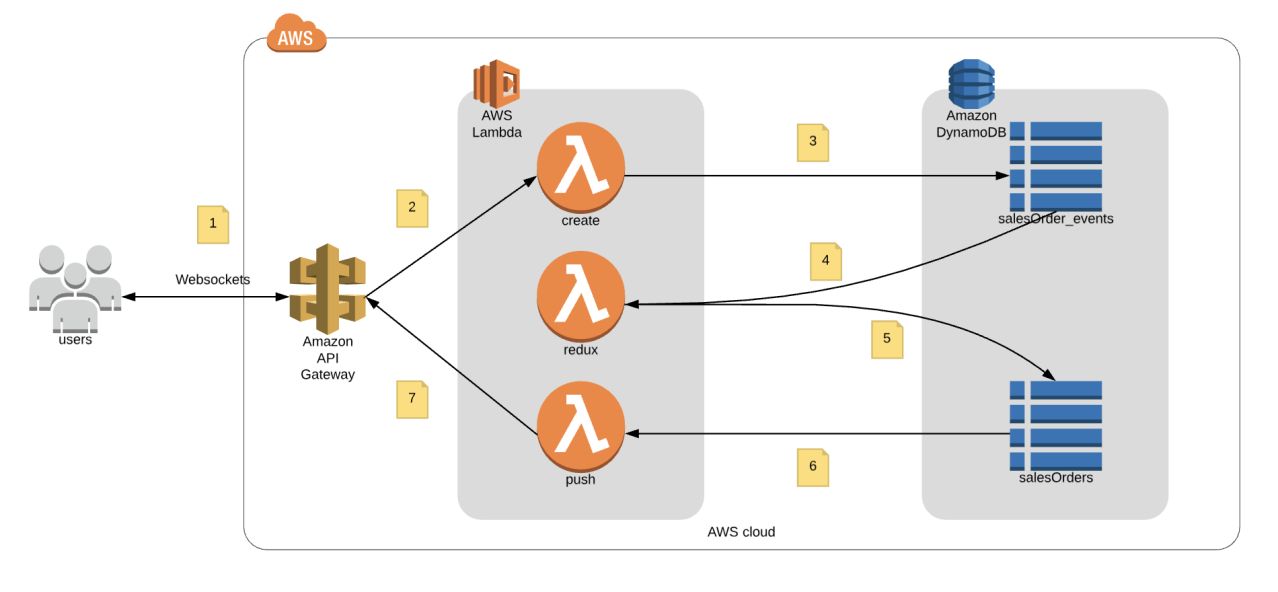

So, I decided to set out to build a Proof of Concept for a Redux-based backend system for managing an Event Sourced model. I wanted to prove a single idea: that Redux could be used to process Events into a client-centric model and done entirely on the server. Basically, could I handle a basic CRUD API using Redux. AWS is being used to test this with Lambda, API Gateway, and DynamoDB. A simple diagram:

In this POC I’m modeling a simple “Sales Order” object. I’m not concerned about actual data fields, just the basic flow of data from client to server and back. Let’s walk through how a Create event would work:

- Connect to API Gateway via a WebSocket client. In my case I’m using wscat for simple testing; normally this would be a web client or a mobile app.

- API Gateway forwards that message to a Lambda function.

- The Lambda writes a new “create” event to the salesOrder_events table.

- A DynamoDB stream on the salesOrder_events table triggers a Lambda that manages the data model via Redux. The event is dispatched as an ‘action’ to the Redux store, and the resulting state becomes the “Sales Order” record.

- The Lambda then writes the resulting Redux state to the salesOrder table.

- The DynamoDB stream on the salesOrder table triggers a Lambda which…

- Pushes the new salesOrder back to all WebSocket clients.
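Stripped of AWS, the decoupled flow is three handlers chained by streams. This is a hypothetical simulation, not the POC’s code: the two DynamoDB tables are in-memory collections, each stream trigger is a direct function call, the WebSocket clients are callbacks, and `createStore` is a tiny stand-in for Redux’s so the block runs with no dependencies. It also assumes the stream Lambda seeds the store with the current record before dispatching the new event; replaying the whole event log would work as well.

```typescript
// Hypothetical simulation of the decoupled POC flow. Tables are in-memory,
// each DynamoDB stream trigger is a direct function call, and WebSocket
// clients are callbacks, so this shows data flow only (no AWS, no latency).

type OrderEvent = {
  type: "create" | "update";
  orderId: string;
  data: Record<string, unknown>;
};

interface SalesOrder {
  orderId: string;
  version: number;
  data: Record<string, unknown>;
}

// Tiny stand-in for Redux's createStore (dispatch + getState only),
// so the sketch runs without the redux package.
function createStore<S, A>(reducer: (state: S, action: A) => S, preloaded: S) {
  let state = preloaded;
  return {
    dispatch(action: A): A {
      state = reducer(state, action);
      return action;
    },
    getState: (): S => state,
  };
}

function salesOrderReducer(state: SalesOrder | null, event: OrderEvent): SalesOrder | null {
  if (event.type === "create") {
    return { orderId: event.orderId, version: 1, data: { ...event.data } };
  }
  if (state === null) return state; // update for an unknown order: ignore
  return { ...state, version: state.version + 1, data: { ...state.data, ...event.data } };
}

// In-memory stand-ins for salesOrder_events, salesOrder and the connections.
const salesOrderEvents: OrderEvent[] = [];
const salesOrders = new Map<string, SalesOrder>();
const connections = new Map<string, (payload: string) => void>();

// API Gateway-triggered Lambda: only appends the event.
function onClientMessage(body: string): void {
  const event = JSON.parse(body) as OrderEvent;
  salesOrderEvents.push(event);
  onEventStream(event); // stands in for the salesOrder_events stream
}

// Stream Lambda: seeds a store with the current record (an assumption),
// dispatches the event as an action, and writes the resulting state.
function onEventStream(event: OrderEvent): void {
  const current = salesOrders.get(event.orderId);
  const store = createStore(salesOrderReducer, current === undefined ? null : current);
  store.dispatch(event);
  const record = store.getState();
  if (record === null) return;
  salesOrders.set(record.orderId, record);
  onOrderStream(record); // stands in for the salesOrder stream
}

// Second stream Lambda: pushes the new record to every connected client.
function onOrderStream(record: SalesOrder): void {
  const payload = JSON.stringify(record);
  connections.forEach((send) => send(payload));
}

// Usage: one connected client, one "create" message.
connections.set("wscat-1", (payload) => console.log("push:", payload));
onClientMessage(JSON.stringify({ type: "create", orderId: "so-1", data: { note: "first" } }));
// prints: push: {"orderId":"so-1","version":1,"data":{"note":"first"}}
```

Every call here returns instantly; in the real system each arrow is a separate stream delivery and Lambda invocation, which is where the latency comes from.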

This simple round-trip can be seen here:

It’s pretty simple, it works, and I think it proves that yes, this is possible.

But I wouldn’t do it, mostly because it’s slow. You can see from the demo that the first call, while things are warming up, is quite slow, but even subsequent calls are still too slow for most applications. This implementation is robust because it’s highly decoupled, but that same decoupling is what causes the lag: every hop through a DynamoDB stream into another Lambda adds latency. This is a known trade-off, and it’s the “eventual” part of most “eventually consistent” systems.

I believe this can be reworked into a faster system, but only by reducing the decoupling and making one Lambda responsible for the entire round-trip.

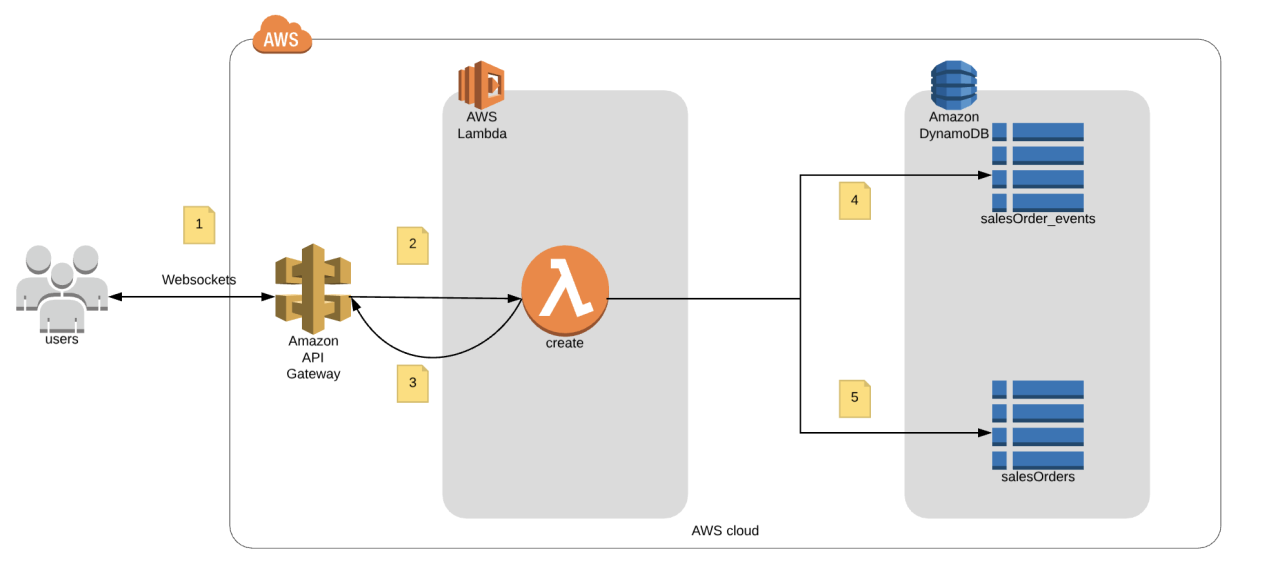

Here’s what that new diagram would look like:

So, a little simpler:

- Connect to API Gateway

- Client sends a new “create” event.

- The Lambda processes the update via Redux and immediately sends it back to the client (this time, just the one that made the request).

- Writes the salesOrder_events record…

- Then the salesOrder record.
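A sketch of that single handler, under stated assumptions: `getRecord`, `send`, `putEvent`, and `putRecord` are hypothetical injected functions standing in for a DynamoDB read, the reply to the caller (in an API Gateway WebSocket API that reply would go through the Management API’s `PostToConnection` operation), and the two table writes. The reducer is a small pure function over a hypothetical `SalesOrder` shape.

```typescript
// Hypothetical sketch of the single-Lambda round-trip. The Deps functions are
// placeholders for a DynamoDB read, the reply to the calling connection, and
// the two table writes; none of them are real AWS SDK calls.

type OrderEvent = {
  type: "create" | "update";
  orderId: string;
  data: Record<string, unknown>;
};

interface SalesOrder {
  orderId: string;
  version: number;
  data: Record<string, unknown>;
}

interface Deps {
  getRecord(orderId: string): Promise<SalesOrder | null>;
  send(connectionId: string, payload: string): Promise<void>;
  putEvent(event: OrderEvent): Promise<void>;
  putRecord(record: SalesOrder): Promise<void>;
}

function salesOrderReducer(state: SalesOrder | null, event: OrderEvent): SalesOrder | null {
  if (event.type === "create") {
    return { orderId: event.orderId, version: 1, data: { ...event.data } };
  }
  if (state === null) return state; // update for an unknown order: ignore
  return { ...state, version: state.version + 1, data: { ...state.data, ...event.data } };
}

async function handleMessage(connectionId: string, body: string, deps: Deps): Promise<void> {
  const event = JSON.parse(body) as OrderEvent;
  const next = salesOrderReducer(await deps.getRecord(event.orderId), event);
  if (next === null) return;
  // Reply only to the requesting client, before any writes happen.
  await deps.send(connectionId, JSON.stringify(next));
  // Then persist: the event first, then the projected record.
  await deps.putEvent(event);
  await deps.putRecord(next);
}
```

Replying before persisting follows the order in the list above and keeps the reply off the write path, but it means a client can see a state whose writes later fail. Writing the event first and replying afterwards would trade a little latency for that guarantee.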

This couples the system more tightly, with all our logic in one Lambda, but it makes things much faster:

So, it seems that a server-side Redux system is possible, and not only for simple data models: I think it’ll work for a full application state too. That’ll come next.

What I want to prove next is that this type of backend could serve a normal Redux store in the UI. The idea is that a middleware added to the store would direct each event either to the local store (when there is no network connection) or to the server-side store (when there is one). Any actions/events written while offline would be sent once the connection was restored, so the backend would always have the full state. This could make for a chatty UI, which could lead to high costs and other problems once the number of users hits a critical point, but until then I think it’d be beneficial to have those Redux actions on the backend for analysis.
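That middleware can be sketched with Redux’s middleware shape, `api => next => action`. This is one hypothetical reading of the idea: while online, actions go only to the server (which is assumed to push the resulting state back); while offline, they are applied to the local store and queued, and `flush` sends the queue in order once the connection returns. `isOnline` and `sendToServer` are assumed hooks (in a browser, perhaps `navigator.onLine` and a WebSocket’s `send`), and the types are written out by hand so the block has no dependencies.

```typescript
// Hypothetical sketch of an online/offline routing middleware. Types are
// hand-written so it runs without the redux package; the shape matches
// Redux middleware: api => next => action => result.

interface Action { type: string; [key: string]: unknown }
type Dispatch = (action: Action) => unknown;
interface MiddlewareAPI<S> { getState(): S; dispatch: Dispatch }
type Middleware<S> = (api: MiddlewareAPI<S>) => (next: Dispatch) => Dispatch;

interface SyncOptions {
  isOnline(): boolean;                // assumed connectivity check
  sendToServer(action: Action): void; // assumed transport, e.g. a WebSocket
}

function createSyncMiddleware<S>(opts: SyncOptions) {
  const pending: Action[] = [];

  const middleware: Middleware<S> = () => (next) => (action) => {
    if (opts.isOnline()) {
      // Online: the server-side store handles it; local state is expected
      // to be updated when the server pushes the result back.
      opts.sendToServer(action);
      return action;
    }
    pending.push(action); // keep it so the backend eventually sees it
    return next(action);  // offline: apply to the local store
  };

  // Call when the connection comes back; sends queued actions in order.
  function flush(): number {
    let sent = 0;
    while (pending.length > 0 && opts.isOnline()) {
      opts.sendToServer(pending.shift()!);
      sent++;
    }
    return sent;
  }

  return { middleware, flush, pendingCount: () => pending.length };
}
```

With the `redux` package, `sync.middleware` would be installed via `applyMiddleware` when creating the store. Routing online actions only to the server keeps a single source of truth, at the cost of a round-trip before the UI updates; applying them locally as well would be the optimistic alternative.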